I had a good conversation with a client on monitoring and performance.

They had one challenge they wanted to look at. Suppose a long-running SAP CPI process needs to load all details from a given store, which could mean 50,000 messages that each need to be processed. How could they manage such a process?

SAP Cloud Integration is not built for data loads; for those you will probably want an ELT tool such as SAP Data Intelligence. But if you have a normal process and only need to perform a full load from time to time, this approach could help you.

There are two problems in the flow:

- How can we monitor the process and see how many entries it has processed and whether it is still processing?

- Can we terminate the process if, for some reason, it is taking too long?

Monitor progress with headers

There are a few options for monitoring the progress of a process. One is the header fields such as SAP_ApplicationID or SAP_MessageType. You can update them multiple times while the integration runs, but the values are only moved to the monitoring from time to time.

Some time ago, there was a challenge with long-running SAP CPI processes: you could not see the status of messages that had been processing for longer than 1 hour. I’m not sure if that is still a problem.

I would recommend using the ApplicationID to hold information about the current load, so it is easy to find the run for a given store.

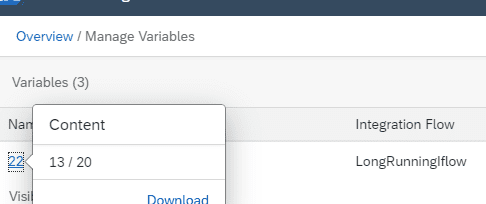
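As a rough illustration of what such a value could look like, the sketch below builds a short status string from a store identifier and a counter. The function name `progress_label`, the string format, and the store id are assumptions made for this example and are not part of the sample IFlow. In a real IFlow you would write the resulting string to the SAP_ApplicationID header in a script or Content Modifier step.

```python
def progress_label(store_id: str, processed: int, total: int) -> str:
    """Build a short, searchable status string for a running load.

    store_id, processed and total are hypothetical inputs; the format
    is just one possible choice.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    # Integer percentage, rounded down, so it never shows 100% too early.
    percent = processed * 100 // total
    return f"{store_id} {processed}/{total} ({percent}%)"


# Example: a quarter of a 50,000-message load is done.
print(progress_label("STORE-0042", 12500, 50000))
```

Putting the store identifier first keeps the value easy to search for in the monitoring, while the counter tells you whether the run is still moving.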

Monitor progress with Variables

Using variables is also a good way to see what is going on in your flow. The variables can be updated on the fly with relevant data, such as the number of messages processed out of the total.

This gives you a status page that is updated as the process progresses.

You will need to evaluate which of the two options works best for you.

Trigger termination

Currently, there is no way to stop an IFlow once it has been triggered.

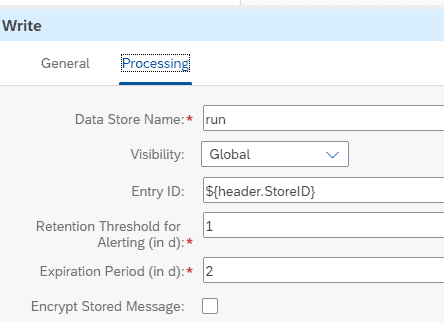

The best way I found was to write a data store entry at the beginning of the processing, like the following. If you have a large payload, it should probably not be persisted.

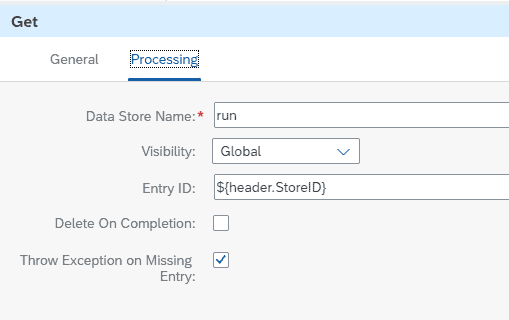

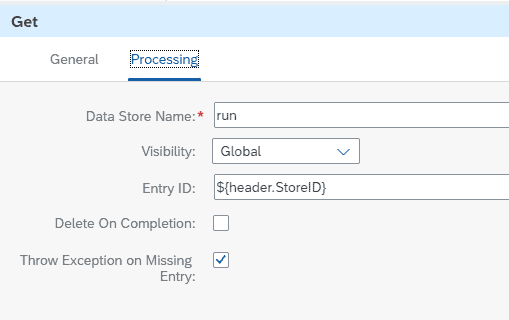

Then, after every 2-5% of the processing, you can read the value from the data store with “Throw Exception on Missing Entry” enabled. The IFlow will then stop processing if the payload is missing.

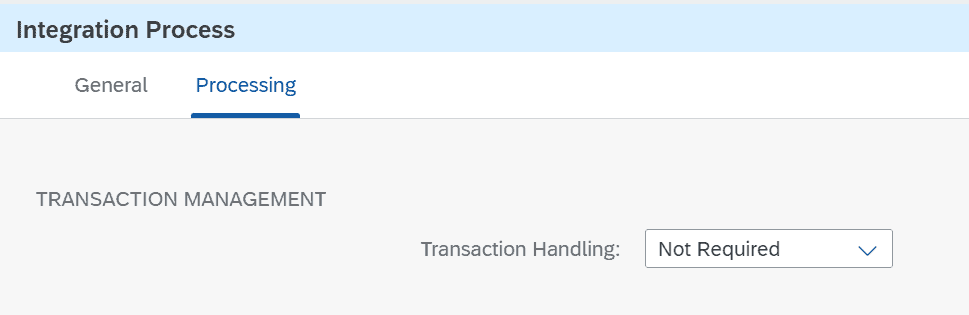
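The logic of this pattern can be sketched outside of CPI. The Python simulation below is not the IFlow itself: a plain dictionary stands in for the data store, and `MissingEntryError`, `run_load`, and the `on_checkpoint` hook are hypothetical names introduced only for this illustration. It shows the three steps: write a marker at the start, check for it every few percent, and abort when it is gone.

```python
class MissingEntryError(Exception):
    """Stands in for the error raised by 'Throw Exception on Missing Entry'."""

    def __init__(self, run_id, processed):
        super().__init__(f"marker {run_id!r} missing after {processed} messages")
        self.processed = processed


def run_load(messages, data_store, run_id, check_percent=2, on_checkpoint=None):
    """Process messages, aborting if the marker entry has been deleted.

    data_store is a dict simulating the CPI data store (an assumption);
    on_checkpoint is an optional hook called at every check, used here
    to simulate someone deleting the entry from outside.
    """
    total = len(messages)
    # Number of messages between two checks, at least one.
    step = max(1, total * check_percent // 100)
    data_store[run_id] = "running"  # marker written at the start of the run
    processed = 0
    for _message in messages:
        if processed % step == 0:
            if on_checkpoint is not None:
                on_checkpoint(processed)
            if run_id not in data_store:
                raise MissingEntryError(run_id, processed)
        # ... the real per-message work would happen here ...
        processed += 1
    data_store.pop(run_id, None)  # clean up the marker after a full run
    return processed


# Usage: simulate an operator deleting the marker halfway through.
store = {}

def cancel_at_half(done):
    if done >= 500:
        store.pop("run-1", None)

try:
    run_load(list(range(1000)), store, "run-1", on_checkpoint=cancel_at_half)
except MissingEntryError as err:
    print("stopped:", err)
```

Checking only every few percent keeps the number of data store reads small (50 reads at 2%), at the cost of the process continuing for up to one interval after the entry has been deleted.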

You will need to remove the JDBC transaction handling to be able to delete the payload while a process is running.

You can then delete the payload for the message, either from the UI or from a separate IFlow. The running IFlow is then terminated at its next check.

Download the IFlow

You can fetch the sample version of the IFlow here.