Figaf Assessment Report of your Landscape

Get a great overview of your landscape with a Figaf Assessment Report

See how easy it is to use Figaf in your Migration Project

Version of MIGs and improved migration report

Points on building a Git workflow for cloud integration

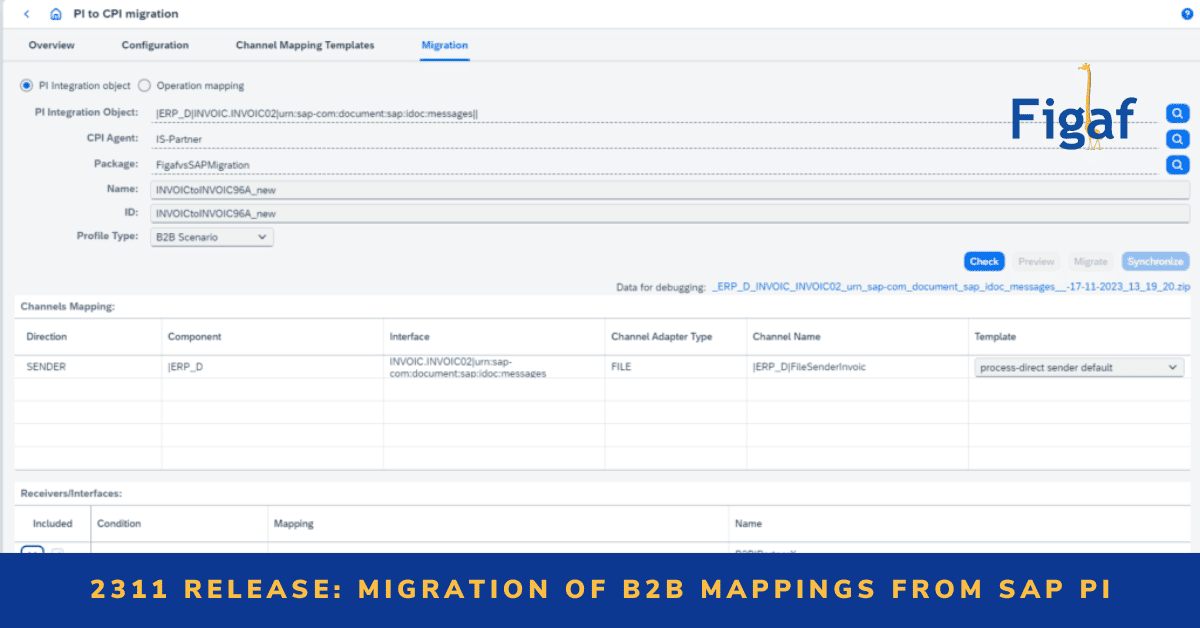

With our 2311 Release, we made it even easier to migrate your B2B mappings.

See how your SAP Migration is possible with this use case.

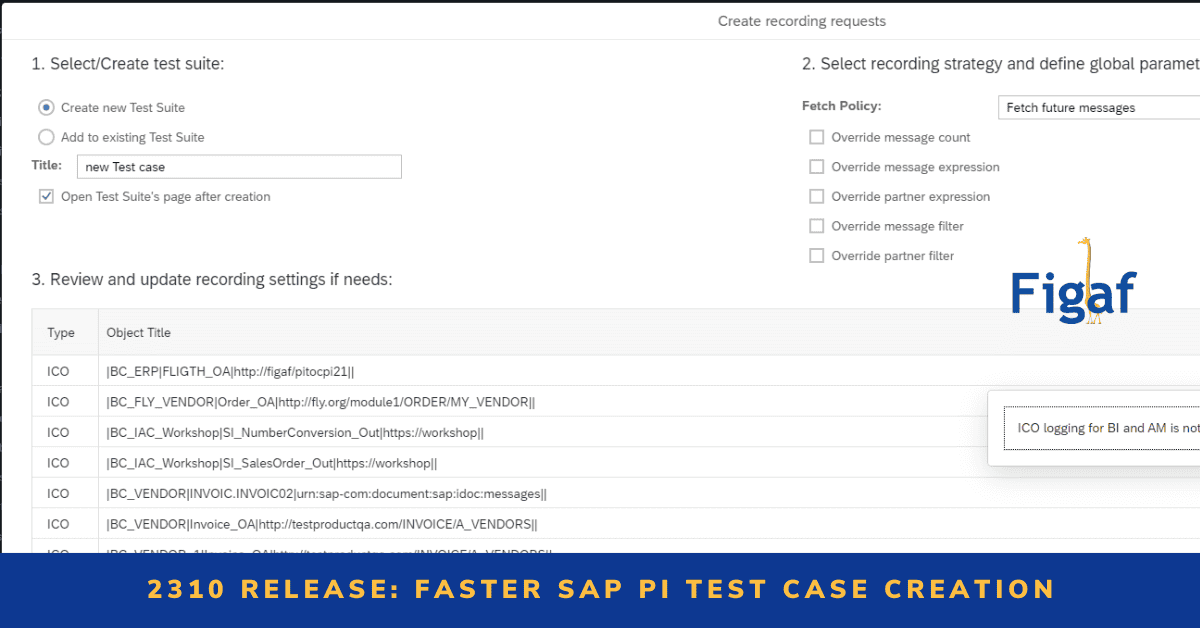

Get faster SAP PI test case creation with our 2310 Release

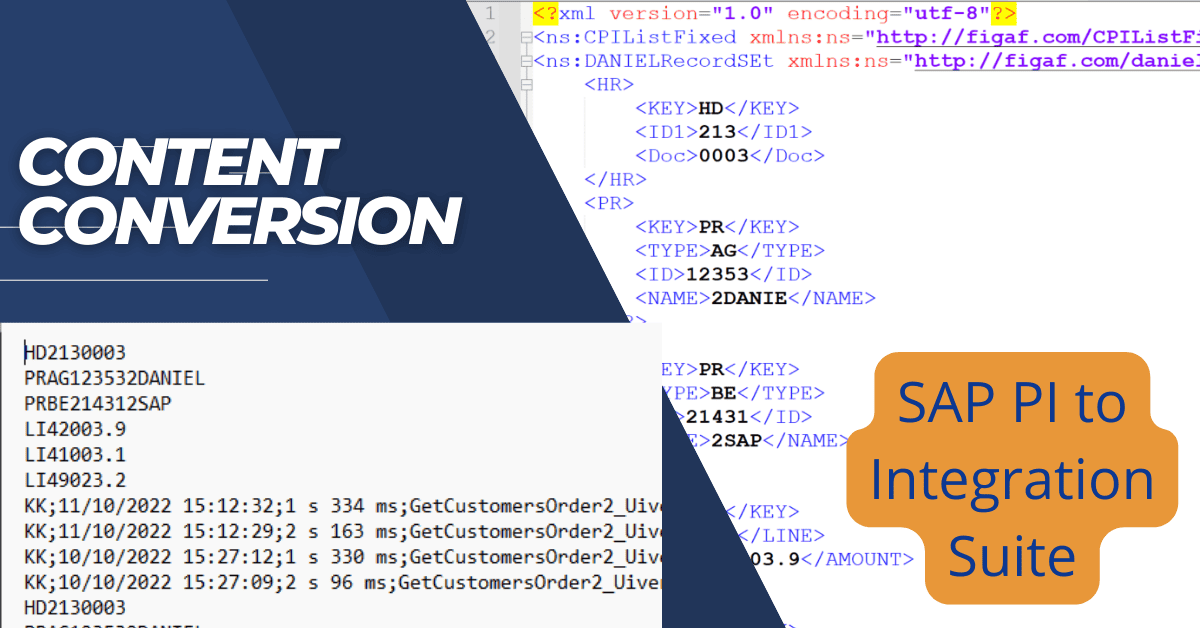

Learn about one of the main features of the 2310 Release: Content Conversion

We have a special offer on our Figaf DevOps Suite, Migration Edition

We improved our September Release with better testing and PI DevOps.

In this free online workshop, we evaluate your current situation with regard to SAP integration and migration, and how automation could help you achieve your goals.